This case study describes the complete steps from root cause analysis to resolution of a sudden OutOfMemoryError problem experienced with Oracle Service Bus 2.6 running on Weblogic Server 9.2.

This case study will also demonstrate how you combine Thread Dump analysis and Solaris PRSTAT to pinpoint culprit Thread(s) consuming large amount of memory on your application Java Heap.

Environment specifications

- Java EE server: Oracle Service Bus 2.6 & Weblogic Server 9.2

- OS: Solaris 10

- JDK: Sun HotSpot 1.5 SR7

- RDBMS: Oracle 10g

- Platform type: Enterprise Service Bus

Troubleshooting tools

- Quest Foglight for Java EE

- Java VM Thread Dump (for stuck Thread analysis)

- Solaris PRSTAT (for Thread CPU contributors breakdown)

Problem overview

- Problem type: OutOfMemoryError

An OutOfMemoryError problem was observed in our Oracle Service Bus Weblogic logs along with major performance degradation.

Preventive restart was then implemented since a Java Heap memory leak was initially suspected which did not prove to be efficient.

Gathering and validation of facts

As usual, a Java EE problem investigation requires gathering of technical and non technical facts so we can either derived other facts and/or conclude on the root cause. Before applying a corrective measure, the facts below were verified in order to conclude on the root cause:

· What is the client impact? HIGH

· Recent change of the affected platform? No

· Any recent traffic increase to the affected platform? No

· Since how long this problem has been observed? Since several months with no identified root cause

· Is the OutOfMemoryError happening suddenly or over time? It was observed that the Java Heap is suddenly increasing and can then take several hours before failing with a OutOfMemoryError

· Did a restart of the Weblogic server resolve the problem? No, preventive restart was attempted which did not prove to be efficient

- Conclusion #1: The sudden increase of the Java Heap could indicate long running Thread(s) consuming a large amount of memory in a short amount of time

- Conclusion #2: A Java Heap leak cannot be ruled out at this point

Java Heap monitoring using Foglight

Quest Foglight monitoring tool was used to monitor the trend of the Oracle Service Bus Java Heap for each of the managed servers. You can see below a sudden increase of the Java Heap OldGen space can be observed.

This type of memory problem normally does not indicate a linear or long term memory leak but rather rogue Thread(s) consuming large amount of memory in a short amount of time. In this scenario, my recommendation to you is to first perform Thread Dump analysis before jumping too quickly to JVM Heap Dump analysis.

Thread Dump analysis

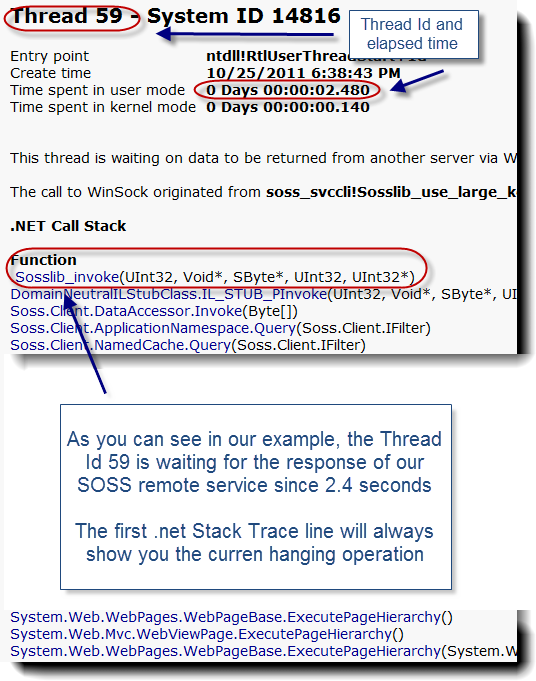

Thread Dump snapshots were generated during the sudden Java Heap increase and analysis did reveal a primary culprit as you can see below.

Analysis of the different Thread Dump snapshots did reveal a very long elapsed time of this Thread along with a perfect correlation with our Java Heap increase. As you can see, this Thread is triggered by the ALSB alerting layer and is involved in read/write of the Weblogic internal diagnostic File Store.

"pool-2-thread-1" prio=10 tid=0x01952650 nid=0x4c in Object.wait() [0x537fe000..0x537ff8f0]

at java.lang.Object.wait(Native Method)

at java.lang.Object.wait(Object.java:474)

at weblogic.common.CompletionRequest.getResult(CompletionRequest.java:109)

- locked <0xf06ca710> (a weblogic.common.CompletionRequest)

at weblogic.diagnostics.archive.wlstore.PersistentStoreDataArchive.readRecord(PersistentStoreDataArchive.java:657)

at weblogic.diagnostics.archive.wlstore.PersistentStoreDataArchive.readRecord(PersistentStoreDataArchive.java:630)

at weblogic.diagnostics.archive.wlstore.PersistentRecordIterator.fill(PersistentRecordIterator.java:87)

at weblogic.diagnostics.archive.RecordIterator.fetchMore(RecordIterator.java:157)

at weblogic.diagnostics.archive.RecordIterator.hasNext(RecordIterator.java:130)

at com.bea.wli.monitoring.alert.AlertLoggerImpl.getAlerts(AlertLoggerImpl.java:157)

at com.bea.wli.monitoring.alert.AlertLoggerImpl.updateAlertEvalTime(AlertLoggerImpl.java:140)

at com.bea.wli.monitoring.alert.AlertLog.updateAlertEvalTime(AlertLog.java:248)

at com.bea.wli.monitoring.alert.AlertManager._evaluateSingleRule(AlertManager.java:992)

at com.bea.wli.monitoring.alert.AlertManager.intervalCompleted(AlertManager.java:809)

at com.bea.wli.monitoring.alert.AlertEvalTask.execute(AlertEvalTask.java:65)

at com.bea.wli.monitoring.alert.AlertEvalTask.run(AlertEvalTask.java:37)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:417)

at java.util.concurrent.FutureTask$Sync.innerRun(FutureTask.java:269)

at java.util.concurrent.FutureTask.run(FutureTask.java:123)

at java.util.concurrent.ThreadPoolExecutor$Worker.runTask(ThreadPoolExecutor.java:650)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:675)

at java.lang.Thread.run(Thread.java:595)

Solaris PRSTAT

Solaris PRSTAT was also captured in order to understand the amount of CPU consumed and determine if this Thread was stuck or performing a lot of processing.

As you can see below, Thread id #76 was identified as the primary CPU contributor with 8.6 %.

prstat –L <Java Pid>

PID USERNAME SIZE RSS STATE PRI NICE TIME CPU PROCESS/LWPID

9116 bea 3109M 2592M sleep 59 0 21:52:59 8.6% java/76

9116 bea 3109M 2592M sleep 57 0 4:28:05 0.3% java/40

9116 bea 3109M 2592M sleep 59 0 6:52:02 0.3% java/10774

9116 bea 3109M 2592M sleep 59 0 6:50:00 0.3% java/84

9116 bea 3109M 2592M sleep 58 0 4:27:20 0.2% java/43

9116 bea 3109M 2592M sleep 59 0 7:39:42 0.2% java/41287

9116 bea 3109M 2592M sleep 59 0 3:41:44 0.2% java/30503

9116 bea 3109M 2592M sleep 59 0 5:48:32 0.2% java/36116

9116 bea 3109M 2592M sleep 59 0 6:15:52 0.2% java/36118

9116 bea 3109M 2592M sleep 59 0 2:44:02 0.2% java/36128

9116 bea 3109M 2592M sleep 59 0 5:53:50 0.1% java/36111

9116 bea 3109M 2592M sleep 59 0 4:27:55 0.1% java/55

9116 bea 3109M 2592M sleep 59 0 9:51:19 0.1% java/23479

9116 bea 3109M 2592M sleep 59 0 4:57:33 0.1% java/36569

9116 bea 3109M 2592M sleep 59 0 9:51:08 0.1% java/23477

9116 bea 3109M 2592M sleep 59 0 10:15:13 0.1% java/4339

9116 bea 3109M 2592M sleep 59 0 10:13:37 0.1% java/4331

9116 bea 3109M 2592M sleep 59 0 4:58:37 0.1% java/36571

9116 bea 3109M 2592M sleep 59 0 3:13:46 0.1% java/41285

9116 bea 3109M 2592M sleep 59 0 4:27:32 0.1% java/48

9116 bea 3109M 2592M sleep 59 0 5:25:28 0.1% java/30733

Thread Dump and PRSTAT correlation approach

In order to correlate the PRSTAT data please follow the steps below:

- Identify the primary culprit / contributor Thread(s) and locate the Thread Id (DECIMAL format) from the last column

- Convert the decimal value to HEXA since Thread Dump native Thread id is in HEXA format

- Search from your captured Thread Dump data and locate the Thread native Id >> nid=<Thread Id HEXA>

For our case study:

- Thread Id #76 identified as the primary culprit

- Thread Id #76 was converted to HEXA format >> 0x4c

- Thread Dump data Thread native Id >> nid=0x4c

Such correlation and finding did confirm that this rogue Thread was actively processing (reading/writing to the File Store) and allocating a large amount of memory on the Java Heap in a short amount of time.

Root cause

The above analysis along with Mark Smith Oracle Blog article finding did confirm the root cause.

Oracle Service Bus allows Alert actions to be configured within the message flow (pipeline alerts). These pipeline alert actions generate alerts based on message context in a pipeline, to send to an alert destination. Such alert destination is the actual Weblogic diagnostic File Store which means this structure will grow over time depending of the volume of Alerts that your OSB application is generating.

It is located under >> //domain_name/servers/server_name/data/store/diagnostics/

In our case, the File Store size was around 800 MB.

Such increase of the diagnostic File Store size over time is leading to an increase of elapsed time of the Thread involved in read/write operations; allocating large amount of memory on the Java Heap. Such memory cannot be garbage collected until Thread completion which is leading to OutOfMemoryError and performance degradation.

Solution

2 actions were required to resolve this problem:

- Reset the diagnostic File Store by renaming the existing data file and forcing Weblogic to create a fresh one

- Review and reduce the Oracle Service Bus alerting level to the minimum acceptable level

The reset of the diagnostic File Store did bring some immediate relief and by ensuring short and optimal diagnostic File Store operations and not causing too much impact on the Java Heap.

The level of OSB alerting is still in review and will be reduced shortly in order to prevent this problem at the source.

Regular monitoring and purging of the diagnostic File Store will also be implemented in order to prevent such performance degradation going forward; as per Oracle recommendations.

Conclusion and recommendations

I hope this article has helped you understand how to identify and resolve this Oracle service bus problem and appreciate how powerful Thread Dump analysis and Solaris PRSTAT tool can be to pinpoint this type of OutOfMemoryError problem.

Please do not hesitate to post any comment or question.