This article will also provide you with proper AIX OS commands you can use to troubleshoot and validate the File Descriptor configuration of your Java VM process.

Environment specifications

- Java EE server: Oracle Service Bus 11g

- Middleware OS: IBM AIX 6.1

- Java VM: IBM JRE 1.6.0 SR9 – 64 bit

- Platform type: Service Bus – Middle Tier

Problem overview

- Problem type: java.net.SocketException: Too many open files error was observed under heavy load causing our Oracle OSB managed servers to suddenly hang

Such problem was observed only during high load and did require our support team to take corrective action e.g. shutdown and restart the affected Weblogic OSB managed servers

Gathering and validation of facts

As usual, a Java EE problem investigation requires gathering of technical and non technical facts so we can either derived other facts and/or conclude on the root cause. Before applying a corrective measure, the facts below were verified in order to conclude on the root cause:

· What is the client impact? HIGH; Full JVM hang

· Recent change of the affected platform? Yes, recent migration from ALSB 2.6 (Solaris OS) to Oracle OSB 11g (AIX OS)

· Any recent traffic increase to the affected platform? No

· What is the health of the Weblogic server? Affected managed servers were no longer responsive along with closure of the Weblogic HTTP (Server Socket) port

· Did a restart of the Weblogic Integration server resolve the problem? Yes but temporarily only

- Conclusion #1: The problem appears to be load related

Weblogic server log files review

A quick review of the affected managed servers log did reveal the error below:

java.net.SocketException: Too many open files

This error indicates that our Java VM process was running out of File Descriptor. This is a severe condition that will affect the whole Java VM process and cause Weblogic to close its internal Server Socket port (HTTP/HTTPS port) preventing any further inbound & outbound communication to the affected managed server(s).

File Descriptor – Why so important for an Oracle OSB environment?

The File Descriptor capacity is quite important for your Java VM process. The key concept you must understand is that File Descriptors are not only required for pure File Handles but also for inbound and outbound Socket communication. Each new Java Socket created to (inbound) or from (outound) your Java VM by Weblogic kernel Socket Muxer requires a File Descriptor allocation at the OS level.

An Oracle OSB environment can require a significant number of Sockets depending how much inbound load it receives and how much outbound connections (Java Sockets) it has to create in order to send and receive data from external / downstream systems (System End Points).

For that reason, you must ensure that you allocate enough File Descriptors / Sockets to your Java VM process in order to support your daily load; including problematic scenarios such as sudden slowdown of external systems which typically increase the demand on the File Descriptor allocation.

Runtime File Descriptor capacity check for Java VM and AIX OS

Following the discovery of this error, our technical team did perform a quick review of the current observed runtime File Descriptor capacity & utilization of our OSB Java VM processes. This can be done easily via the AIX procfiles <Java PID> | grep rlimit & lsof -p <Java PID> | wc –l commands as per below example:

## Java VM process File Descriptor total capacity

>> procfiles 5425732 | grep rlimit

Current rlimit: 2000 file descriptors

## Java VM process File Descriptor current utilization

>> lsof -p <Java PID> | wc –l

1920

As you can see, the current capacity was found at 2000; which is quite low for a medium size Oracle OSB environment. The average utilization under heavy load was also found to be quite close to the upper limit of 2000.

The next step was to verify the default AIX OS File Descriptor limit via the ulimit -S –n command:

>> ulimit -S –n

2000

- Conclusion #2: The current File Descriptor limit for both OS and OSB Java VM appears to be quite low and setup at 2000. The File Descriptor utilization was also found to be quite close to the upper limit which explains why so many JVM failures were observed at peak load

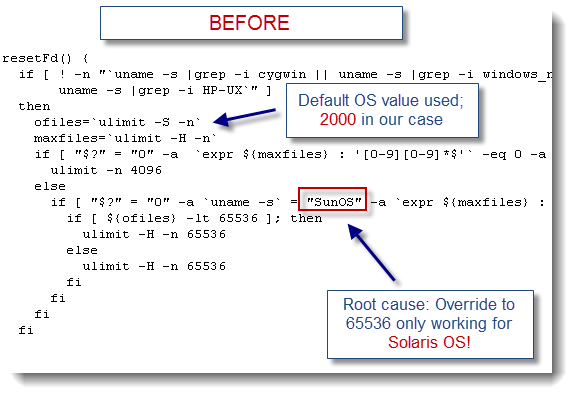

Weblogic File Descriptor configuration review

The File Descriptor limit can typically be overwritten when you start your Weblogic Java VM. Such configuration is managed by the WLS core layer and script can be found at the following location:

<WL_HOME>/wlserver_10.3/common/bin/commEnv.sh

..................................................

resetFd() {

if [ ! -n "`uname -s |grep -i cygwin || uname -s |grep -i windows_nt || \

uname -s |grep -i HP-UX`" ]

then

ofiles=`ulimit -S -n`

maxfiles=`ulimit -H -n`

if [ "$?" = "0" -a `expr ${maxfiles} : '[0-9][0-9]*$'` -eq 0 -a `expr ${ofiles} : '[0-9][0-9]*$'` -eq 0 ]; then

ulimit -n 4096

else

if [ "$?" = "0" -a `uname -s` = "SunOS" -a `expr ${maxfiles} : '[0-9][0-9]*$'` -eq 0 ]; then

if [ ${ofiles} -lt 65536 ]; then

ulimit -H -n 65536

else

ulimit -H -n 4096

fi

fi

fi

fi

.................................................

Root cause: File Descriptor override only working for Solaris OS!

As you can see with the script screenshot below, the override of the File Descriptor limit via ulimit is only applicable for Solaris OS (SunOS) which explains why our current OSB Java VM running on AIX OS did end up with the default value of 2000 vs. our older ALSB 2.6 environment running on Solaris OS which had a File Descriptor limit of 65536.

Solution: script tweaking for AIX OS

The resolution of this problem was done by modifying the Weblogic commEnv script as per below. This change did ensure a configuration of 65536 File Descriptor (from 2000); including for the AIX OS:

** Please note that the activation of any change to the Weblogic File Descriptor configuration requires a restart of both the Node Manager (if used) along with the managed servers. **

A runtime validation was also performed following the activation of the new configuration which did confirm the new active File Descriptor limit:

>> procfiles 6416839 | grep rlimit

Current rlimit: 65536 file descriptors

No failure has been observed since then.

Conclusion and recommendations

- When upgrading your Weblogic Java EE container to a new version, please ensure that you verify your current File Descriptor limit as per the above case study

- From a capacity planning perspective, please ensure that you monitor your File Descriptor utilizaiton on a regular basis in order to identify any potential capacity problem, Socket leak etc..

Please don’t hesitate to post any comment or question on this subject if you need any additional help.

6 comments:

Great post.

Our application is managing many socket connections with many of them which doesn't terminate nicely (many sockets in CLOSE_WAIT state seen with lsof -i).

The error message "too many open files" is often seen although the ulimit has been raised to a higher value.

Let's test it and push it to production.

Thanks.

Hi Deschrevel,

A surge of CLOSE_WAIT can be an indicating that your application is not managing the sockets/HTTP and other related protocols properly. Lack of timeouts is one of the most common issue.

Increasing Socket capacity can help in short term and I highly recommend that you identify the source of these sockets, then perform an application code review in order to pinpoint the root cause.

Thanks.

P-H

Thank you for this post..

I've expierenced this situation today..

and it's helpful to me to find out the root cause of problem.

Wish you luck~

Hi Pierre,

We are having an issue with OSB 10.3.1. Under heavy load the java process of the managed server(we have 2 managed server running in cluster) increase too fast the number of IDLE sockets.For this reason we need to restart the managed server 3 times peer week. Did you know what coul be the cause?. In my mind there are two things could cause this mess. Tunning the value of tcp_keepalive_interval and the other one with the Socket Readers implementation, that the native option isn't enable and the servers are using the java option.

Thanks in advice

Luis Aviña

Hi Luis,

OSB relies heavy on SocketMuxer and async. sockets for inbound and outbound communications. Your problem sounds like a Socket leak. OS level tuning won't help if those Sockets are still referenced by the OSB/WLS kernel. This can happen if you have timeout related problems for inbound and/or outbound requests.

Please see below my recommendations:

- Generate a few Thread Dump snaphosts per day (or look at WLS thread monitoring view) and determine if the # of threads is also increasing

- Generate a few netstat snapshots per day and determine which pool of Socket is leaking e.g. inbound related sockets or outbound related sockets. Once identified, identify the source and destination IP addresses these Sockets are related to. Also, check the state of these Sockets e.g. ESTABLISHED, CLOSE_WAIT etc.

Once you get the above information, please update me with the data so we can take it from there.

Regards,

P-H

Hi P-H,

Great post.

We have a problem with ulimit parameter in Weblogic 11g hosted in Linux. Soft andbHard ULIMIT values 65k each. However Weblogic is not taking those values and it is considering default value 1024 (as verified in /proc//limits ) for both soft and hard ulimit values. This is causing too many open files erros in my application.

Please advise. Thanks for your help.

Thanks,

Pappu

Post a Comment